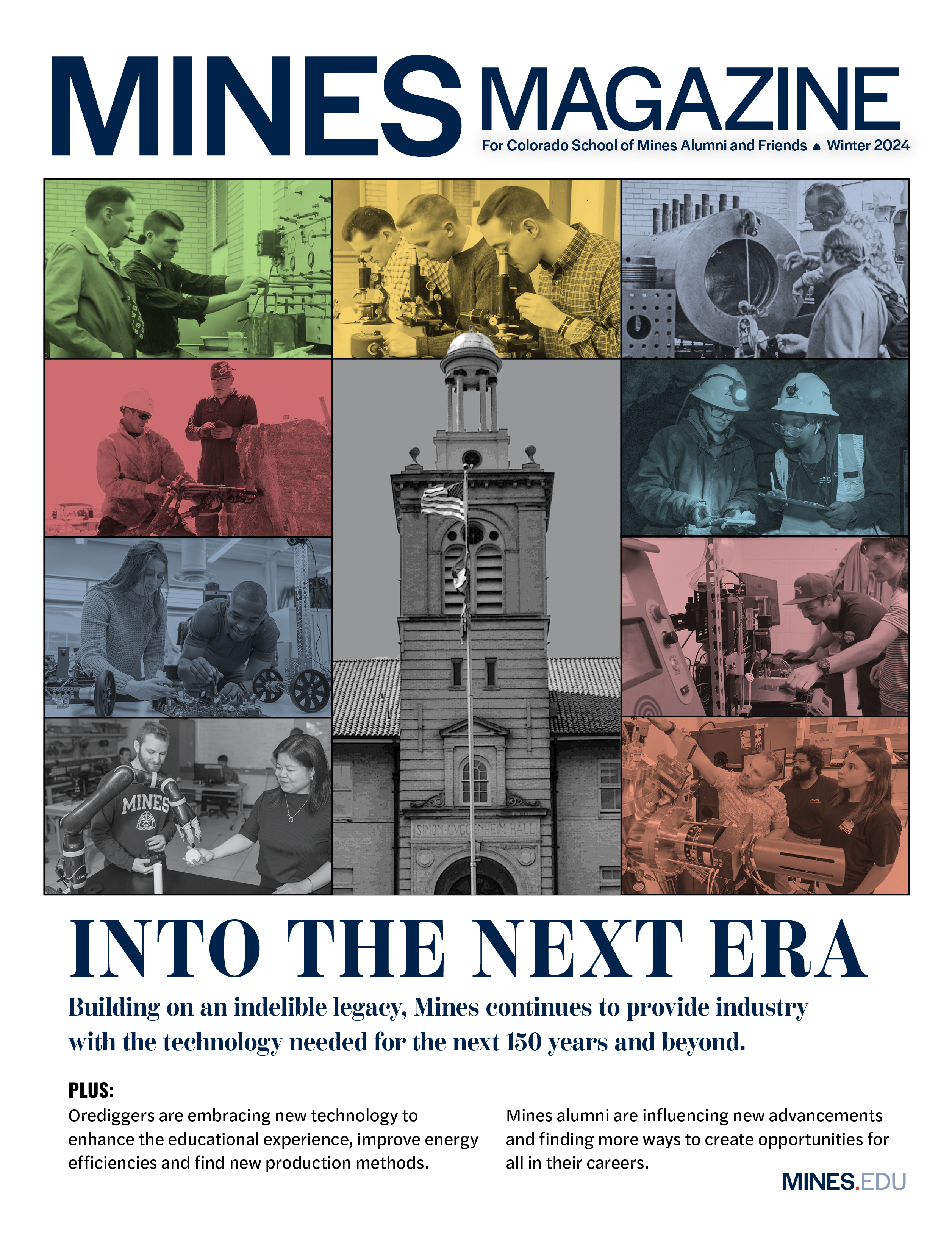

In tech we trust—to a point

Once a novelty, robotic vacuums are now a common sight in homes. Chances are, you’ve seen or used one, and manufacturers have even expanded to produce automated mops and lawnmowers.

While exponential growth of such devices in our daily lives seems a foregone conclusion, the technology still needs to mature and earn the trust of humans—much like a teenager asking to drive the family car.

That hurdle will be tougher to clear than any technological challenge, according to Xiaoli Zhang, associate professor of mechanical engineering.

Take self-driving cars. The technology simply can’t guarantee reliability yet, Zhang said. But even more important, it raises many concerns for the people who will use those cars.

“It’s not just whether you can achieve 100 percent safety,” Zhang said. “Humans have to understand how it’s done—if an AI can explain how decisions are made, it makes humans feel safer.”

Robotic vacuums are everywhere because the stakes are lower and the technology is easier to explain—with fewer ethical questions. That’s also true for carts that follow shoppers around or robots that deliver food and other products—applications Zhang has seen from startups.

With the challenges of full autonomy, Zhang sees human-robot cooperation as the immediate future, particularly in industry, and rejects the idea that robots will eliminate existing jobs.

“In manufacturing, robots will take on the repetitive work, such as assembly, but humans will be the supervisor,” Zhang said. “A robot could be sent into dangerous environments for mining exploration or search-and-rescue, but a human will provide high-level decision-making.”

As the field of automated robotics grows, “to commercialize automated robots and bring practical, wide impact on everyone’s daily life, the key for robotics companies will be identifying pain points and finding those well-validated technologies, not necessarily the cutting edge, to meet those needs,” Zhang said. “The technology has to actually solve problems—otherwise, it’s just in the demo stage.”

What should robots do if they’re given a command that’s immoral?

That’s the question we asked Tom Williams, assistant professor of computer science, whose research focuses on human-robot interaction. Here’s what he said:

“Language-capable robots have unique persuasive power due to their naturally high levels of perceived social and moral agency. When a robot receives an unethical command, it not only needs to reject that command, it needs to reject it in the right way.

“Our work on moral language generation has shown that humans are sensitive to the phrasing robots use when rejecting commands. Robots must carefully tailor the strength of their responses to the severity of a human’s violation to be effectively persuasive and avoid being perceived as overly harsh—or not harsh enough. To achieve this balance, we’re exploring how robots can use different politeness strategies and moral frameworks to ground their rejections.

“We’re currently focusing on Confucian role ethics and the ways it might be used by robot designers. There are at least three ways designers can employ Confucian principles when developing this technology:

- Designing knowledge representations and algorithms that consider roles and relationships.

- Explicitly enabling robots to reject inappropriate commands by appealing to roles rather than notions of right and wrong.

- Assessing whether the robot’s design encourages its teammates to adhere to moral principles.”
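
For readers curious how the first two ideas might look in software, here is a minimal Python sketch. It is an illustration only, not Williams's implementation: the `Severity` scale, the `Command` fields and the response templates are all hypothetical, and a real system would need a far richer model of roles and relationships. It shows a command that records who is asking and in what role, and a rejection whose wording gets stronger as the violation gets more severe while appealing to roles rather than to right and wrong.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Optional


class Severity(IntEnum):
    """Hypothetical scale for how serious a requested violation is."""
    MINOR = 1
    MODERATE = 2
    SEVERE = 3


@dataclass(frozen=True)
class Command:
    """A spoken command plus the roles involved (all fields are assumptions)."""
    action: str                   # what the human asked the robot to do
    speaker_role: str             # role of the person asking, e.g. "supervisor"
    robot_role: str               # role the robot holds, e.g. "assistant"
    severity: Optional[Severity]  # None means the command is acceptable


# Illustrative templates: firmer wording for more severe violations,
# phrased in terms of roles instead of right and wrong.
TEMPLATES = {
    Severity.MINOR: (
        "Are you sure? A good {speaker_role} wouldn't usually ask "
        "their {robot_role} to {action}."
    ),
    Severity.MODERATE: (
        "I'd rather not. A good {robot_role} shouldn't {action} "
        "for their {speaker_role}."
    ),
    Severity.SEVERE: "I won't do that. No {robot_role} should {action}.",
}


def respond(cmd: Command) -> str:
    """Accept an acceptable command, or reject with severity-matched wording."""
    if cmd.severity is None:
        return f"Okay, I will {cmd.action}."
    return TEMPLATES[cmd.severity].format(
        action=cmd.action,
        speaker_role=cmd.speaker_role,
        robot_role=cmd.robot_role,
    )


if __name__ == "__main__":
    print(respond(Command("fetch the wrench", "supervisor", "assistant", None)))
    print(respond(Command("hide the safety report", "supervisor", "assistant",
                          Severity.SEVERE)))
```

Keeping the templates in a single table makes it easy to test different phrasings with people, which is where the question of "too harsh" or "not harsh enough" would actually be settled.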